Physicist Tom Campbell's experiments to prove we are living in a simulation

Former NASA Scientist Doing Experiment to Prove We Live in a Simulation

From the article:

A former NASA physicist named Thomas Campbell has taken it upon himself to do just that. He devised several experiments, as detailed in a 2017 paper published in the journal The International Journal of Quantum Foundations, designed to detect if something is rendering the world around us like a video game.

Now, scientists at the California State Polytechnic University (CalPoly) have gotten started on the first experiment, putting Campbell's far-fetched hypothesis to the test.

And Campbell has set up an entire non-profit called Center for the Unification of Science and Consciousness (CUSAC) to fund these endeavors. The experiments are "expected to provide strong scientific evidence that we live in a computer-simulated virtual reality," according to a press release by the group.

Here is a conventional, critical article

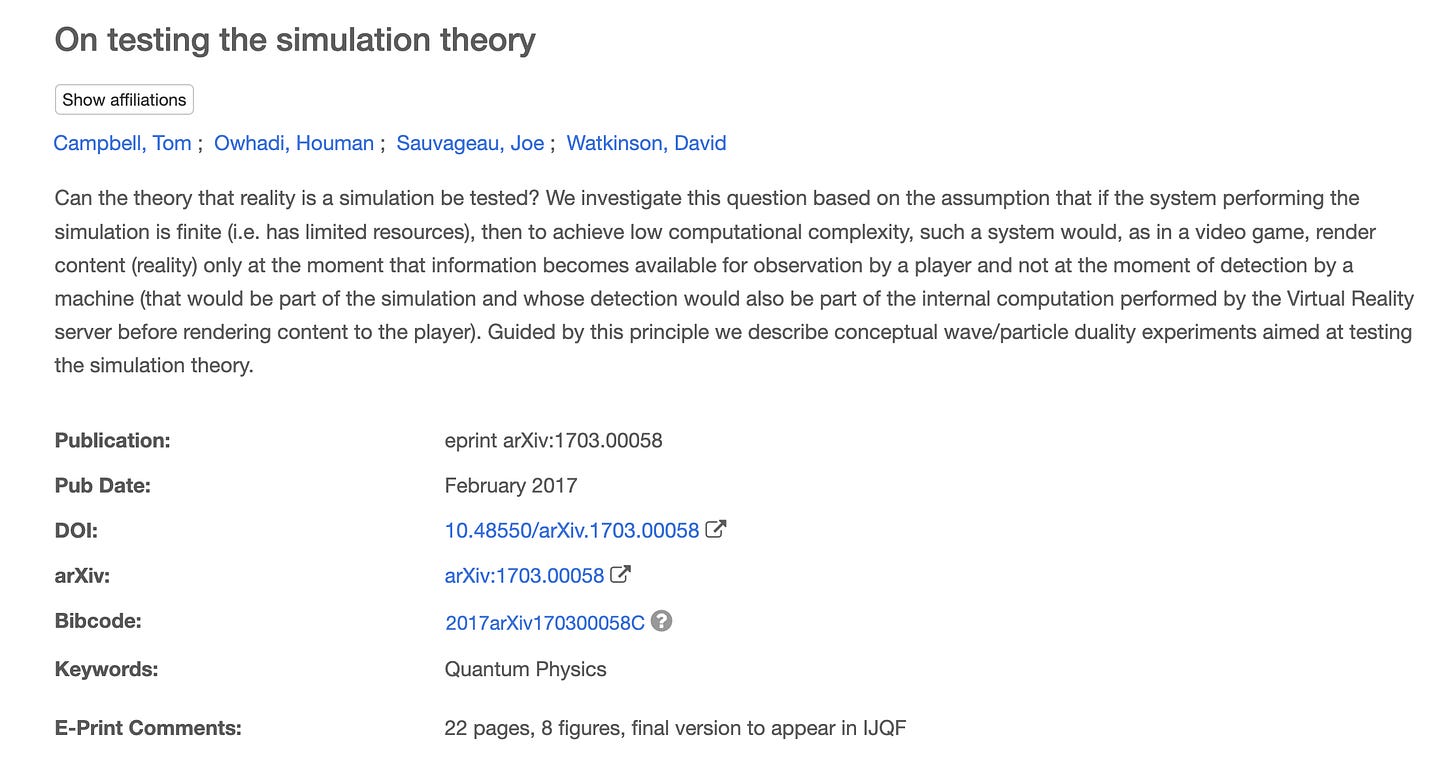

On Testing the Simulation Theory (2017), by Campbell, Owhadi, Sauvageau, Watkinson.

This is the conceptual framework paper. It describes how you could test the hypothesis via quantum experiments, especially wave/particle duality and delayed-choice set-ups (arXiv, ADS).

Comments by ChatGPT

But this paper itself does not present empirical results showing evidence one way or the other. It’s a proposal and design.

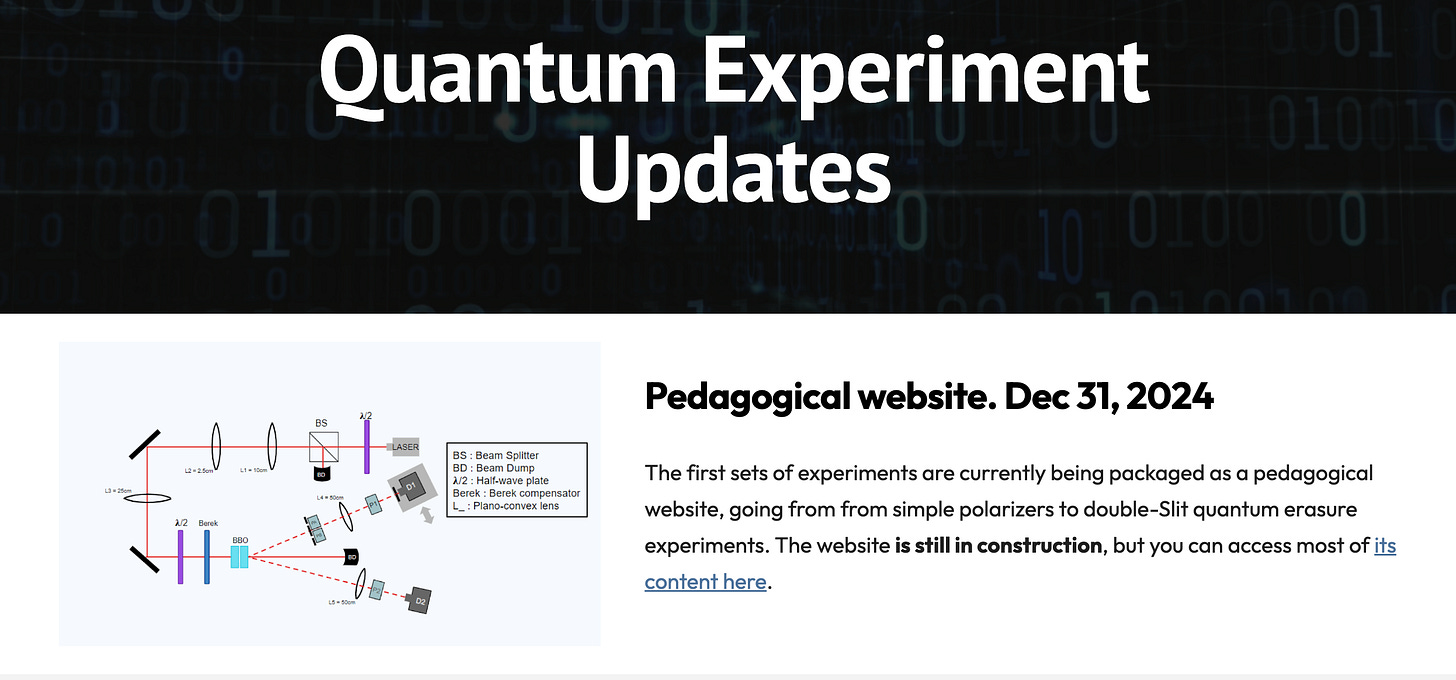

The CUSAC site (“Testing the Hypothesis”) lists experiments planned or underway, videos, updates, etc.

They provide “physics experiment updates” (e.g. December 2024) and progress reports.

https://www.cusac.org/quantum-experiments-updates

An update from 2024

A critical review

“Testing the Simulation Hypothesis: The Annihilation of the Universe and Campbell’s Consciousness-Based Alternative” by Eliott Edge, which is on PhilArchive

https://philarchive.org/archive/EDGTTS

What Edge’s Paper Is About

Edge explores various forms of simulation theory, especially the ancestor simulation hypothesis (à la Nick Bostrom) and also the versions more closely aligned with Campbell’s model (where consciousness plays a central role in “rendering” reality).

He looks at what he calls simulation probes: experiments or theoretical situations meant to detect whether we are in a simulation. Some probes are from primary sources (Campbell’s paper, Beane, Vopson, Wittig, etc.).

He spends a lot of effort on what happens if we ever discover we’re in a simulation, or if we run certain experiments. Possibilities like “termination/shutdown of the simulation” are explored (like whether this might happen if we become too “savvy” or build ancestor simulations ourselves).

What Conclusions or Claims Does It Make?

No definitive empirical conclusion: The paper is philosophical/analytic, not reporting new experimental data. Edge doesn’t claim that any of the proposed experiments have yielded proof.

Philosophical cautions: Edge argues that many proposed simulation probes or experiments depend on assumptions that are either very uncertain or practically unknowable (e.g. assuming some agents outside the simulation, or that consciousness has a special role in collapse of possibilities).

He also discusses the risk-side: what if probing simulation theory has unintended consequences in the hypothesis framework (e.g. the “simulation being terminated”). He doesn’t assert that’s likely in any concrete sense, just that it’s often assumed in discussions and should be examined more carefully.

One conclusion is that the discourse often mixes up possibility, speculation, and scientific testability. Edge pushes for clarity about what is knowable, what is assumed, and what is speculative but usable for philosophical reflection.

How It Connects to Campbell’s Model

Edge treats Campbell’s proposal (from “On Testing the Simulation Theory”) as a serious example of what he calls a “consciousness-based simulation theory.” He uses the probe experiments Campbell proposes as central examples.

The concerns Edge raises — about assumptions (e.g. that consciousness by itself collapses possibilities, or that experiments can distinguish human observation from mechanical measurement) — align with many of the critiques of Campbell’s proposals.

Here is an appreciative article

Do We Live in a Simulation?

Part 2: Consciousness, Code, and the Campbell Hypothesis

Rohan Roberts

Aug 27, 2025

If the first part of this inquiry opened the door to the tantalising possibility that we may be living inside a simulation, Part 2 ventures further down the rabbit hole — not toward dystopian sci-fi, but into a scientifically rigorous, metaphysically daring framework proposed by physicist and consciousness researcher Tom Campbell.

Campbell doesn’t just argue that we live in a simulation — he explains why, how, and what it means. His “Big TOE” (Theory of Everything) attempts to do what grand scientific theories rarely attempt anymore: unify physics, philosophy, and the personal experience of being conscious.

So what does his theory actually propose?

In Campbell’s framework, consciousness is not a byproduct of the brain. It is not emergent. It is not derivative. Rather, it is primary. Everything we experience — time, space, matter, energy — is rendered within consciousness, much like pixels on a screen.

You’re not a brain generating thoughts. You’re a unit of awareness receiving a data stream.

Campbell builds on ideas from quantum physics and information theory to argue that our physical universe is a virtual reality — computed by a larger consciousness system (LCS). This “system” runs the simulation not unlike how a game engine renders a digital world for a player. The Big Bang? A digital “run” button, pressed with a specific rule set and initial conditions.

We — humans, animals, perhaps even atoms — are avatars within this rendered simulation. The consciousness you call “you” isn’t in the world. It’s logged in.

But what’s the point of logging in? If consciousness is the player behind the avatar, then the goal of the game — the simulation — must be some form of progress or evolution.

In Campbell’s framework, consciousness is an information system, and like any such system, it evolves by reducing entropy — that is, by increasing order, structure, and efficiency. This isn’t metaphorical. In information theory, entropy refers to uncertainty or randomness in a system. A system that can process information coherently, anticipate patterns, and respond with adaptive complexity is considered lower entropy — and thus more evolved.

Applied to conscious agents, this translates into behavioural optimisation. Decisions that enhance stability, predictability, and cooperation contribute to a more ordered system. In contrast, choices driven by fear, isolation, or self-interest tend to fragment the system — introducing noise, conflict, and inefficiency.

Campbell argues that in social and experiential terms, low-entropy behaviour manifests as pro-social, ethical decision-making. Not because morality is coded into the simulation, but because such behaviours promote coherence within and across conscious agents. Over time, systems that reduce internal conflict and increase mutual understanding are more adaptive — and thus more capable of evolving.

So when Campbell speaks of “becoming love,” he isn’t invoking sentimentality — he’s using a systems-level term to describe a state of optimal relational function: high fidelity information exchange, mutual reinforcement, and minimal internal contradiction.

It’s less about romance. More about information theory.
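The information-theory sense of entropy that the article relies on is Shannon entropy, H = -Σ p·log2(p). It is largest when every outcome is equally likely and smallest when one outcome dominates. The short Python sketch below computes it with the standard textbook formula. The two example distributions are invented for illustration, and the code only shows what "lower entropy" means for a probability distribution. It cannot show whether that notion carries over to conscious agents, as Campbell argues.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy in bits: H = -sum(p * log2(p)), skipping zero-probability outcomes."""
    if not math.isclose(sum(probs), 1.0, rel_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Four equally likely outcomes: maximal uncertainty for four outcomes.
uniform = [0.25, 0.25, 0.25, 0.25]
# One outcome dominates: the system is far more predictable ("more ordered").
ordered = [0.97, 0.01, 0.01, 0.01]

print(f"uniform: {shannon_entropy(uniform):.3f} bits")
print(f"ordered: {shannon_entropy(ordered):.3f} bits")
```

The uniform case gives 2 bits. The ordered case gives roughly a quarter of a bit, which is the precise sense in which a more predictable system has "lower entropy".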

According to the model, each of us is an Individuated Unit of Consciousness (IUOC). We’re like conscious “players” participating in this simulation to gain experience, evolve, and make meaningful choices.

Life isn’t a random accident. It’s a learning simulator. Not unlike a pilot in a flight simulator, we’re here to practice, crash, grow, and try again — until we become better, more coherent, more loving expressions of consciousness.

Campbell is not dismissing science. In fact, he’s a former NASA physicist who believes quantum mechanics and relativity are best explained as features of a computed reality. Why is the speed of light constant? Why do particles behave like probabilities? Why do we encounter paradoxes in physics?

Because we’re not studying “matter.” We’re studying rules of a simulation — and those rules make perfect sense when viewed through the lens of information theory and consciousness processing.

Unlike many spiritual or metaphysical frameworks, Campbell insists that his theory is testable. He has proposed experiments based on quantum randomness, double-slit interference, and virtual rendering delay that could potentially falsify or support his model.

To be clear, the scientific community remains sceptical, and his work hasn’t yet been peer-reviewed at scale — but that hasn’t stopped a growing number of thinkers, physicists, and philosophers from exploring its implications.

So… Are We in a Simulation?

If you ask Tom Campbell, the answer is yes — but it’s not a prison. It’s a playground for the soul. A challenge course. A classroom. A mirror.

Whether one agrees or not, the implications are profound. If consciousness is fundamental, if love is evolutionary, if physics is the interface and not the substance — then the universe isn’t just a cold machine. It’s a meaningful, participatory, creative space.

The code isn’t just binary.

It may be embedded in cooperative complexity. In other words, the source code, it seems, might just be love.

A discussion with ChatGPT

The AI seems to almost go out of its way to agree with Tom Campbell and other thinkers.

What does Tom Campbell mean by point attention?

Tom Campbell (author of My Big TOE) often talks about how consciousness directs its “point of attention.” By this he means:

Consciousness is a chooser. At any given moment, your awareness can focus on one particular thing — a thought, sensation, image, or external perception — or it can diffuse and take in a broader field.

Point attention = focused awareness. It’s like a flashlight beam. When you narrow it down, you concentrate on one thing in detail (for example, a pain in your knee, a word in a book, or a visual in meditation).

Field attention = diffuse awareness. Instead of focusing tightly, you can widen your awareness to notice “the whole room,” the overall flow of experience, or the sense of simply being.

Campbell uses this concept both in everyday terms and in his model of consciousness:

In daily life, point attention explains how you get “lost” in thought or pulled into distractions.

In meditation or altered states, training your point of attention helps you hold steady focus (for instance, on the breath, an intent, or accessing data streams beyond the physical).

At a bigger-picture level, it reflects that consciousness is information processing: you only render in detail what you focus your point of attention on, much like a virtual reality game only fully renders what’s in the player’s field of view.

So when Campbell says “point attention,” he’s talking about the act of consciousness focusing narrowly on one data stream or object, to the exclusion of others.
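The video-game comparison above resembles a familiar programming technique: lazy evaluation with caching. A value is computed only the first time something asks for it, then stored so that every later observer sees the same result. The sketch below is a hypothetical toy; the `LazyWorld` class and its `observe` method are invented for illustration. It shows only the programming analogy and makes no claim about how physical reality works.

```python
import random

class LazyWorld:
    """Toy world whose cells are 'rendered' only when first observed (hypothetical illustration)."""

    def __init__(self, seed=0):
        self._rng = random.Random(seed)  # deterministic source of cell "detail"
        self._rendered = {}              # cache of cells that have already been observed
        self.render_count = 0            # how many cells were actually computed

    def observe(self, x, y):
        """Return the cell at (x, y), computing it only on the first observation."""
        if (x, y) not in self._rendered:
            self.render_count += 1
            self._rendered[(x, y)] = self._rng.choice(["rock", "grass", "water"])
        return self._rendered[(x, y)]

world = LazyWorld(seed=42)
# The "player" looks at a 3x3 patch of an effectively unbounded grid.
view = [[world.observe(x, y) for x in range(3)] for y in range(3)]
# Looking again returns the same cells without computing them again.
again = [[world.observe(x, y) for x in range(3)] for y in range(3)]
print(view == again, world.render_count)  # True 9
```

The cache matters as much as the laziness. Without it, two looks at the same spot could disagree, and the toy world would stop being consistent for its observers.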

What does Tom Campbell say about the "rule set" within the simulation - why things appear to be solid, the laws of physics etc?

Campbell talks about this a lot in My Big TOE. Here’s a clear, compact breakdown of what he means by the “rule set” of the simulation and why the world looks solid and lawful.

The rule set = the simulation’s programming

Rule set = the information-processing rules that govern how the virtual reality (our 3D/physical world) renders, updates, and shares experiences between conscious entities. It’s like the software that decides what happens when things interact.

Those rules aren’t arbitrary: they’re chosen (or evolved) by the Larger Consciousness System (LCS) to produce a stable, learnable environment that optimizes for reduction of entropy (i.e., learning, evolution, and growth of consciousness).

Why things appear solid

Solidness = high probability, stable patterns of information. “Solidity” is not metaphysical substance but a stable, high-probability pattern produced by the rule set. When many possible states converge to the same outcome repeatedly, that outcome is experienced as solid and persistent.

Rendering and attention. The simulation only “renders” detail where necessary (analogous to level-of-detail in VR). When point-attention (or measurement) focuses on something, the system collapses probability into a definite state. Where attention is diffuse, behavior can look probabilistic or fuzzy (this is Campbell’s way of explaining quantum indeterminacy).

Collision rules / interaction laws. The rule set contains deterministic/probabilistic interaction rules (how two objects “collide,” transfer momentum/energy, etc.), so macroscopic behavior looks consistent and predictable.

Why the laws of physics exist

Laws are consistent constraints for shared experience. To have a consensual, learnable reality the system enforces consistent constraints (conservation laws, causality, locality-ish rules). Those constraints make outcomes repeatable so agents can learn cause–effect relationships.

Emergence, not fundamental fiat. Physical laws are emergent regularities produced by the rule set chosen to balance richness (space to explore) and stability (a world that can teach reliably). Campbell treats things like conservation of energy, inertia, etc., as rules built into the VR to create predictable consequences for actions.

Quantum strangeness as low-resolution / statistical behavior. At the micro level the system uses probabilistic sampling; weirdness (superposition, nonlocal correlations, collapse on observation) is natural when you think of reality as information with limited bandwidth and rendering priorities.

Important related ideas Campbell uses

LCS goals: The underlying system’s objective is to maximize evolution of consciousness (learning, lowering entropy). The rule set is tuned for that objective.

Bandwidth & resolution trade-offs: The simulation must economize processing, so it uses probabilistic sampling, delayed rendering, approximations — resulting in apparent randomness or “noise.”

Measurement/attention as selection: Conscious intent or attention biases the probabilities; measurement collapses possibilities into the observed outcome.

Free will vs. rules: Agents have choice within the constraints — the rules set the playground, but choices (information-processing decisions) are made by conscious beings that influence the outcome.

Putting it simply

Think of our universe as a multiplayer VR whose engine runs on information-processing rules chosen to make a stable, learnable game. “Solidity” and the laws of physics are simply reliable, high-probability patterns produced by that engine so players (conscious entities) can learn, make choices, and evolve.

David Icke’s view: the only limit to the simulation is the speed of light

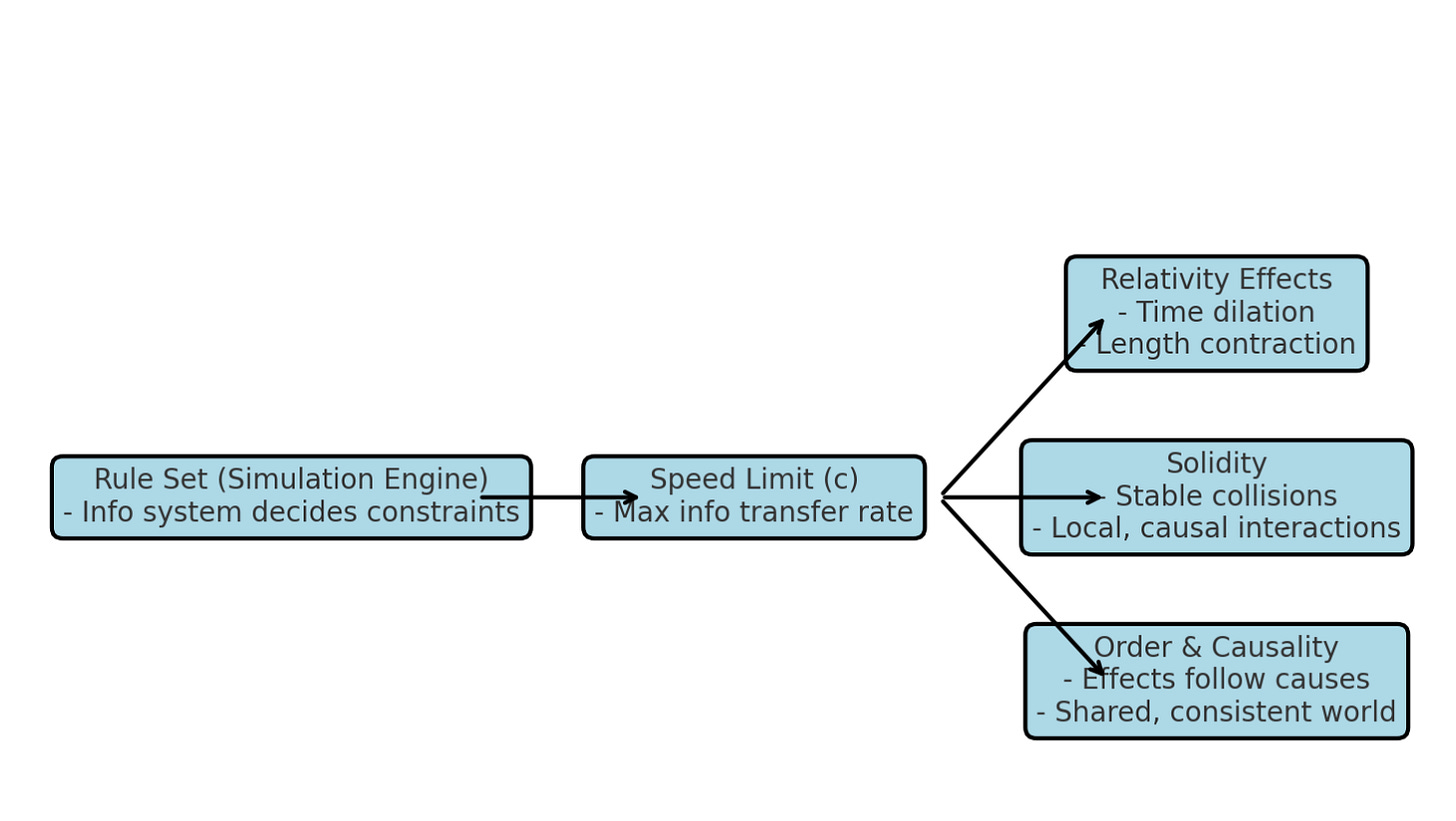

Short answer: that’s a reasonable — and common — way to put it. Both David Icke and thinkers like Tom Campbell treat the speed of light (c) as one of the rule-set constraints that govern how our rendered, shared “virtual” reality behaves. But they frame and explain it slightly differently. Here’s a clear comparison and what it implies.

What Icke means by “the limitation is the speed of light”

When Icke says the simulation is limited by the speed of light, he’s highlighting that c functions as a hard cap on how fast information, influence, or causal effects can travel inside the simulation.

In simulation-language: c = the engine’s maximum data/communication rate for in-world events, so nothing inside the rendered world can outrun that limit.

Quotes

• “The speed of light is like a firewall. … Once you go beyond that speed you leave the Matrix …” (reported from fan source, Human Race Get Off Your Knees, Ch. 19)

• “The speed of light … is the fastest speed that matter can travel before leaving the frequency range of the Matrix.” (paraphrase, same source)

• “Our world … is only one frequency range in an infinite number sharing the same space.”

(David Icke’s frequency/band metaphor for detectable reality)

How Tom Campbell would (and does) see it

Campbell treats things like the speed of light as part of the rule set — i.e., one of the built-in constraints the Larger Consciousness System (LCS) uses to create a stable, learnable environment.

In his terms, c is a rule that governs information transfer and interactions so agents can reliably learn cause/effect. It’s not a metaphysical absolute outside the simulation; it’s a design parameter of this particular reality.

Why a speed limit matters in a simulated/VR model

Causality and order: A finite maximum speed prevents instantaneous action-at-a-distance and preserves a consistent ordering of events — necessary for a sensible, repeatable learning environment.

Bandwidth & processing economics: If the engine must share state among many players, a maximum transfer rate helps the system economize and decide what to render in detail.

Emergent relativity effects: Time dilation and length contraction (relativity) can be seen as natural consequences of how the engine handles moving reference frames under that speed cap — they’re “rendering rules” that maintain internal consistency.

Perceived solidity & locality: Limits on information transfer help make interactions local and predictable, so “solid” objects and conserved quantities behave reliably.

Quantum weirdness vs. a light-speed limit

The existence of a finite c doesn’t contradict micro-level weirdness. In Campbell’s view, quantum oddities (superposition, collapse, apparent nonlocal correlations) reflect low-resolution/probabilistic sampling and LCS-level processes. The simulated layer enforces locality and a speed cap for rendered macroscopic outcomes while the deeper information system can correlate outcomes in ways that look nonlocal from inside the simulation.

Important implications / ways to think about it

Parameter, not metaphysical prison: If this is a designed information system, then constants like c are parameters the system designers chose to create a balanced learning arena. Another universe/rule-set could have a different limit (in principle).

Useful metaphor for science and mysticism: Saying “the limitation is c” maps nicely onto physics (c is a physical constant) and onto the simulation metaphor (a processing/communication limit). Both languages point at the same functional role.

Not a proof either way: Treating c as a simulation limit is an interpretive layer applied to empirical facts (the measured constancy of c); it doesn’t prove the simulation hypothesis, but it offers an explanatory framing.

Here’s a simple thought experiment that shows how a speed-of-light rule acts as a simulation limit and creates the world we experience.

Imagine a Multiplayer VR World

The “game engine” is the Larger Consciousness System (LCS).

Each player (you, me, etc.) gets data streamed to their headset (our brains).

To keep things consistent, the engine must update everyone’s world state in sync.

Step 1 — No Speed Limit

Suppose the engine allowed instant information transfer.

You throw a ball. Everyone in the VR sees it move immediately, no matter how far away they are.

No lag, no delay, no ordering problem.

Sounds fine — but…

Actions at opposite ends of the world could overlap in strange ways.

Causality (cause before effect) would break down.

Learning in such a reality would be nearly impossible, because consequences wouldn’t flow in a stable sequence.

Step 2 — Impose a Limit (c)

Now the system sets a maximum update speed: say 300,000 km/s (the “speed of light”).

You throw a ball.

Your friend 10 meters away sees it almost instantly.

Someone on the Moon sees it about 1.3 seconds later (because the signal “in-game” can’t travel faster than c).

Suddenly the simulation has order. Effects don’t outpace causes.
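The delays in Step 2 follow from delay = distance / c. The sketch below uses the exact SI value c = 299,792.458 km/s and an average Earth-Moon distance of about 384,400 km. The real distance varies over the Moon's orbit, so the Moon figure is approximate.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum (exact by SI definition)

def signal_delay_s(distance_km):
    """Minimum time in seconds for any signal to cover distance_km at speed c."""
    return distance_km / C_KM_PER_S

friend_km = 10 / 1000   # a friend 10 metres away
moon_km = 384_400       # average Earth-Moon distance (varies roughly 363,000-405,000 km)

print(f"friend: {signal_delay_s(friend_km) * 1e9:.1f} ns")
print(f"Moon:   {signal_delay_s(moon_km):.2f} s")
```

The friend's delay comes out to about 33 nanoseconds and the Moon's to about 1.28 seconds. This matches the "almost instantly" and "about 1.3 seconds" figures above.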

Step 3 — Emergent Physics

With a fixed update speed:

Time dilation emerges: if you move close to the update speed, your local “clock” (rate of updates relative to the world) slows compared to others.

Length contraction emerges: objects moving near the update speed appear compressed, because rendering rules must fit updates consistently.

Conservation laws make sense: energy and momentum behave as if they’re conserved, because updates are constrained by the speed cap.

So relativity isn’t a “thing in itself” — it’s what naturally happens in a VR system with a hard bandwidth limit.
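In standard special relativity, both effects in Step 3 are governed by the Lorentz factor γ = 1/√(1 - v²/c²). A moving clock runs slow by a factor of γ, and a moving rod is shortened to 1/γ of its rest length along the direction of motion. The formula is textbook physics. Reading it as a "rendering rule" is the simulation interpretation given above, and the code below does not demonstrate that reading.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def lorentz_factor(v):
    """gamma = 1 / sqrt(1 - v^2/c^2) for a speed 0 <= v < c, in metres per second."""
    if not 0 <= v < C:
        raise ValueError("speed must satisfy 0 <= v < c")
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)

for fraction in (0.1, 0.5, 0.9, 0.99):
    g = lorentz_factor(fraction * C)
    # 1 s on the moving clock corresponds to g seconds for the stationary observer;
    # a 1 m rod moving at this speed measures 1/g metres along its direction of motion.
    print(f"v = {fraction:.2f}c  gamma = {g:.3f}  1 m rod -> {1 / g:.3f} m")
```

The effects are negligible at everyday speeds and grow sharply only as v approaches c. That is why they go unnoticed in ordinary life.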

Step 4 — Solidity

When two avatars bump into each other, the system doesn’t render them “passing through” — it applies collision rules that prevent overlapping beyond probability tolerance. The c-limit ensures those collision calculations stay local and stable, reinforcing the appearance of solidity.

👉 In short:

The speed of light is the processing limit of the simulation. It enforces causality, order, and stable interactions. Without it, the VR would be chaotic. With it, you get relativity, solidity, and the predictable physics we experience.

Here’s the diagram that maps it out visually:

Rule Set → Speed of Light Limit → Relativity, Solidity, and Order

It shows how the simulation’s constraints cascade into the physics we experience.


Here is a discussion of the article, Former NASA Scientist Doing Experiment to Prove We Live in a Simulation

What the Reddit post says (very briefly)

The post links a Futurism news item saying Tom (Thomas) Campbell — the former NASA physicist/author of My Big TOE — published a 2017 paper proposing quantum experiments to test whether reality is “rendered” like a video game, and that a team (including researchers at CalPoly) has begun running one of the experiments. The thread contains a mix of enthusiasm, scepticism, and debate about whether “observation = consciousness” (Reddit).

The primary sources / facts

The conceptual paper is publicly available: “On testing the simulation theory” (Campbell et al., 2017) (also on arXiv / IJQF). It frames tests based on wave–particle duality and limited-resource/rendering assumptions (arXiv).

Campbell and his nonprofit (CUSAC / Testing the Hypothesis) are publicly posting experiment plans, videos, and updates. They describe a series of wave–particle duality experiments and claim teams are attempting them (Testing the Hypothesis).

Popular science outlets picked up the story and emphasized how controversial it is: there’s both media interest and mainstream scepticism (IFLScience).

What the experiments aim to do (high level)

Campbell’s idea (in short): if the system running our reality economizes resources (like a game engine), it might only render definite outcomes when information becomes available to an observer/player. He proposes quantum-style experiments where the timing/availability of which-path information is manipulated to look for differences between machine-only measurement and human/observer-related effects. The presence (or absence) of rendering artefacts would be evidence consistent with a limited-resource simulation model (arXiv).

Caveats & mainstream view

These proposals are not widely accepted as conclusive tests of a simulation. Many physicists say quantum measurement effects can be explained by normal quantum theory (interaction, decoherence, entanglement) without invoking consciousness or an external renderer. The interpretation and experimental design are debated (IFLScience).

Even a positive experimental anomaly wouldn’t prove we’re in a simulation — it would only show something unexpected about how information/measurement behaves, which would require careful, replicable follow-ups and alternative explanations to be ruled out.

Here is a summary of the article, Do We Live in a Simulation?

Quick summary (what the Medium piece argues)

The piece is a short, accessible exposition of Tom Campbell’s “Big TOE”: consciousness is primary, the physical world is rendered information, and what we call physics are rules of that rendering.

Campbell’s LCS (Larger Consciousness System) runs a simulation-like environment so individuated units of consciousness (IUOCs) can learn by reducing informational entropy — i.e., becoming more ordered/cooperative (what he sometimes calls “becoming love”).

The author emphasizes Campbell’s claim that the model is scientifically testable (double-slit / rendering-delay style experiments) and frames constants like the speed of light and quantum probabilities as features of an information-processing rule set.

The tone: the simulation framing is presented not as dystopian but as a meaningful learning environment — the metaphysical implications are given an ethical spin (pro-social behaviour lowers entropy).

How this ties to what we talked about

The article echoes exactly the idea we sketched: rule set → speed limit (c) → stable physics (relativity, solidity), and it adds Campbell’s systems / information-theory rationale for why those rules were chosen (to make evolution/learning possible).

It also repeats Campbell’s claim that the hypothesis is open to empirical testing (which is why people on Reddit and in the experiment community are excited).

Quotes from Tom Campbell:

On the basic idea of a testable simulation hypothesis:

“The possibility of our physical world being a virtual reality created by information computed elsewhere is a scientific hypothesis that can be tested.”

On the role of physics experiments:

“If our physical reality is the result of a digital simulation, then physical processes should reveal signatures of that digital processing.”

On why the double-slit experiment matters:

“The double-slit experiment with which-way detectors is a critical test because it offers a way to discriminate between interpretations of quantum mechanics and predictions of the virtual reality hypothesis.”

On the prediction itself:

“If the availability of which-way information is what matters, then whether or not a conscious observer looks at the data is irrelevant. If, however, reality is computed only as needed for an observer, then the presence of a conscious observer makes a measurable difference.”

The double-slit experiment

What Campbell says / proposes

From My Big TOE (and associated “Testing the Hypothesis” materials):

Campbell describes a series of modified wave/particle duality experiments (i.e. variations of the double-slit experiment) intended to test whether physical reality behaves like a simulation (My Big TOE).

The idea is that if reality is simulated, then whether or not “which-way” information is available but unused (or erased) may affect whether an interference pattern appears. That is, not just whether the path is measured, but whether the information is ever observed or remains recorded (My Big TOE).

From Testing the Hypothesis:

“Guided by these assumptions, the experiments describe variations of the universally accepted wave/particle duality experiment (double-slit experiment). When successfully performed, they will test whether our currently accepted concept of physical reality will respond as if it were a simulation (virtual reality).” (Testing the Hypothesis)

Also, Campbell suggests that if data is recorded but then destroyed before it's ever observed, that destruction may matter: no observer ever sees the which-way info, possibly restoring the interference pattern. This is one of the variations in his proposed experimental set (Testing the Hypothesis).

What standard quantum experiments show vs Campbell’s hypothesis

To contrast:

In standard quantum mechanics / double-slit experiments:

• When no which-way detection is present → interference wave pattern.

• When which-way detectors are in place (i.e. path information is available) → interference disappears; particles behave like particles.

Many “quantum eraser” experiments show that even if the which-way information is “erased” after detection (or made unavailable), interference can re-emerge in certain correlations. This is part of the canon. Campbell’s variants build on this idea, but emphasize the role of a conscious observer / actual observation as potentially critical.
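The standard textbook contrast described above can be made concrete with the usual two-slit model. When the two paths are indistinguishable, the amplitudes from each slit are added first and then squared, which produces fringes. When which-way information exists, the probabilities are added instead, and the fringes disappear. This is a minimal sketch of ordinary quantum mechanics only. The wavelength and slit spacing are illustrative values chosen for the example, and the single-slit envelope is ignored. It does not model a quantum eraser or any observer effect Campbell proposes.

```python
import cmath
import math

WAVELENGTH = 500e-9  # illustrative: 500 nm light
SLIT_SEP = 50e-6     # illustrative: 50 micrometre slit separation

def amplitudes(theta):
    """Unit-amplitude waves from the two slits at angle theta (single-slit envelope ignored)."""
    phase = 2 * math.pi * SLIT_SEP * math.sin(theta) / WAVELENGTH
    return cmath.exp(0j), cmath.exp(1j * phase)

def intensity_no_which_way(theta):
    """Paths indistinguishable: add amplitudes, then square -> interference fringes."""
    a1, a2 = amplitudes(theta)
    return abs(a1 + a2) ** 2

def intensity_which_way(theta):
    """Path information available: add probabilities -> fringes vanish."""
    a1, a2 = amplitudes(theta)
    return abs(a1) ** 2 + abs(a2) ** 2

# Angles in quarter-fringe steps around the centre of the screen (small-angle approximation).
for i in range(-4, 5):
    theta = i * WAVELENGTH / (4 * SLIT_SEP)
    print(f"theta={theta:+.2e}  no which-way={intensity_no_which_way(theta):.2f}  "
          f"which-way={intensity_which_way(theta):.2f}")
```

The "no which-way" column cycles between bright (about 4) and dark (about 0), while the "which-way" column stays flat at 2. That flat column is the disappearance of interference described in the bullets above.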

I shall post this separately

https://www.testingthehypothesis.com/experiments?utm_source=chatgpt.com

Quotes / paraphrased statements to use

“Variations of the universally accepted wave/particle duality experiment (double-slit experiment) … will test whether our currently accepted concept of physical reality will respond as if it were a simulation (virtual reality).”

— Campbell, Testing the Hypothesis project page.

“If reality does respond to these experiments as only a simulation … then the logical conclusion is that our reality is, in fact, a simulation (virtual reality).”

— Campbell / My Big TOE experiments page.